Review Articles

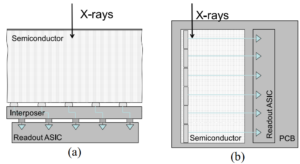

Photon Counting Detectors for X-Ray Imaging With Emphasis on CT

R. Ballabriga, J. Alozy, F. N. Bandi, M. Campbell, N. Egidos, J. M. Fernandez-Tenllado, E. H. M. Heijne, I. Kremastiotis, X. Llopart, B. J. Madsen, D. Pennicard, V. Sriskaran, and L. Tlustos

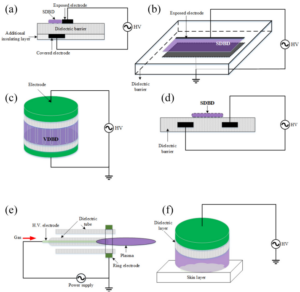

A Review of Dielectric Barrier Discharge Cold Atmospheric Plasma for Surface Sterilization and Decontamination

Kolawole Adesina, Ta-Chun Lin, Yue-Wern Huang, Marek Locmelis and Daoru Han

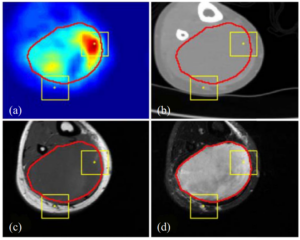

Systematic Review on Learning-Based Spectral CT

Alexandre Bousse, Venkata Sai Sundar Kandarpa, Simon Rit, Alessandro Perelli, Mengzhou Li, Guobao Wang, Jian Zhou and Ge Wang

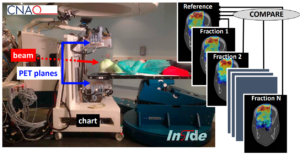

Technological Developments and Future Perspectives in Particle Therapy: A Topical Review

Aafke Christine Kraan, Alberto Del Guerra

Featured Articles

Total Body PET: Why, How, What for?

Suleman Surti, Austin R. Pantel and Joel S. Karp

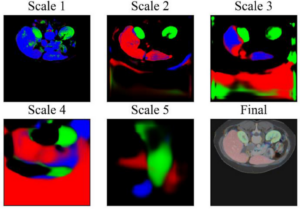

Current and Emerging Trends in Medical Image Segmentation With Deep Learning

Pierre-Henri Conze, Gustavo Andrade-Miranda, Vivek Kumar Singh, Vincent Jaouen, Dimitris Visvikis

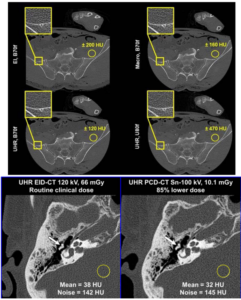

Photon Counting CT: Clinical Applications and Future Developments

Scott S. Hsieh, Shuai Leng, Kishore Rajendran, Shengzhen Tao, Cynthia H. McCollough

DefED-Net: Deformable Encoder-Decoder Network for Liver and Liver Tumor Segmentation

Tao Lei, Risheng Wang, Yuxiao Zhang, Yong Wan, Chang Liu, Asoke K. Nandi

Most Read Articles

Deep Learning-Based Image Segmentation on Multimodal Medical Imaging

Zhe Guo, Xiang Li, Heng Huang, Ning Guo and Quanzheng Li

On Interpretability of Artificial Neural Networks: A Survey

Feng-Lei Fan, Jinjun Xiong, Mengzhou Li, Ge Wang

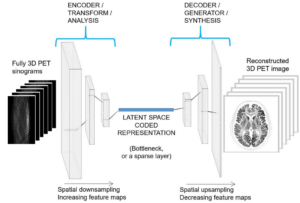

Deep Learning for PET Image Reconstruction

Andrew J. Reader, Guillaume Corda, Abolfazl Mehranian, Casper da Costa-Luis, Sam Ellis, Julia A. Schnabel

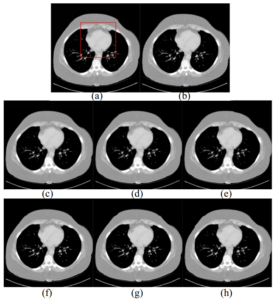

Deep-Neural-Network-Based Sinogram Synthesis for Sparse-View CT Image Reconstruction

Hoyeon Lee, Jongha Lee, Hyeongseok Kim, Byungchul Cho, Seungryong Cho